SignAll: the New Sign Language Translation Platform

SignAll has been working for years to make accurate, real-time machine translation of American Sign Language (ASL) a reality and, according to Business Wire, has developed the world’s first automated sign language translator. The goal of the technology is to build a bridge between the deaf and hearing worlds. Providing full accessibility for deaf people is a very ambitious aspiration that technology could not support until now.

It’s multi-channel communication; it’s really not just about shapes or hand movements. If you really want to translate sign language, you need to track the entire upper body and facial expressions — that makes the computer vision part very challenging. – CEO Zsolt Robotka

Most people take it for granted that they can communicate in their first language in their home country. However, the first language of people born deaf is sign language; English is only their second language. SignAll’s aim is to enable deaf people to communicate in their first language, American Sign Language.

At present, SignAll uses three webcams, a depth sensor and a PC. The depth sensor is placed in front of the sign language user at chest height, and the cameras are placed around them. This allows the shape and path of the hands and gestures to be tracked continuously. The PC synchronizes and processes the images in real time, and a natural language processing module then transforms the result into grammatically correct, fully formed sentences. This enables communication by making sign language understandable to everyone.

According to TechCrunch, the setup right now uses a Kinect 2 more or less at center and three RGB cameras positioned a foot or two out. The system must reconfigure itself for each new user, since just as everyone speaks a bit differently, all ASL users sign differently.

“We need this complex configuration because then we can work around the lack of resolution, both time and spatial (i.e. refresh rate and number of pixels), by having different points of view,” Márton Kajtár, chief R&D officer, told TechCrunch. “You can have quite complex finger configurations, and the traditional methods of skeletonizing the hand don’t work because they occlude each other. So we’re using the side cameras to resolve occlusion.”

Facial expressions and slight variations in gestures also inform what is being said, for example adding emotion or indicating a direction. And then there’s the fact that sign language is fundamentally different from English or any other common spoken language.

SignAll’s first public pilot of the system, at Gallaudet University.

Self-driving cars. When will you buy yours?

Technology never ceases to amaze us, and while some of us are always looking for the next trend, it gets harder and harder to know what is here to stay. More than that: which new invention is sure to remain in our lives for many years to come and have a huge impact on them? By all accounts so far, and in specialists’ opinion, we do have a winner. Driverless cars may once have been a subject of science fiction, but nowadays the question is no longer whether they will replace manually driven cars, but how quickly they will take over.

Self-driving cars are safer. They don’t get sleepy or distracted, they don’t have blind spots, and there is nothing on their “minds” except getting safely from point A to point B. They look amazing and are so much smarter than anything we could imagine, so how could we not be interested in the subject? One of the freshest pieces of news is that Samsung is stepping up its plans for self-driving cars to rival former Google project Waymo, Uber and Apple, bringing the key players from the battle for smartphone dominance to the brave new world of autonomous vehicles. According to The Guardian, the South Korean electronics manufacturer, the world’s largest smartphone maker, has been given permission to test its self-driving cars on public roads by the South Korean ministry of land, infrastructure and transport, becoming one of 20 firms allowed to test self-driving technology on public roads in South Korea. Samsung’s smartphone rival, Apple, was recently granted permission to test its long-rumoured vehicles in California.

photo: Apple car

Unlike Apple, Google and other US technology firms, which predominantly use modified Lexus SUVs for testing autonomous systems, Samsung is using fellow Korean firm Hyundai’s vehicles. The cars will be augmented with Samsung-developed advanced sensors and machine-learning systems, which Samsung hopes to be able to provide to others building vehicles, rather than build cars itself. “Samsung Electronics plans to develop algorithms, sensors and computer modules that will make a self-driving car that is reliable even in the worst weather conditions,” said a Samsung spokesperson. The South Korean business giant completed its USD 8 billion (£6.2bn) acquisition of US automotive and audio supplier Harman International in March, a move it said would help Samsung seize on the transformative opportunities autonomous vehicle technology could bring.

Another big move in the market came in March, when Intel announced it would buy Mobileye for about USD 15 billion to lead its self-driving car unit.

photo: Samsung car

According to ExtremeTech.com, Waymo, which was spun off from Google X’s self-driving car project, has been working on a new generation of self-driving car technology based on Fiat-Chrysler Pacifica minivans. The new design looks a bit less “prototypical” than the older Google cars, but there’s still a large white hump on the top with a lidar array poking upward. These vehicles have been cruising around the streets of Phoenix for the better part of a year, and now locals can request rides in the Waymo cars. The program actually started two months ago, but it was kept under wraps and limited to only a few people. Now, Waymo is opening it up to anyone in one of the supported areas, which include Phoenix and the surrounding cities of Chandler, Tempe, Mesa, and Gilbert. You have to apply for access to the program, but once you’re accepted, all members of your immediate family can take advantage of it. Waymo also encourages those in the program to use the self-driving cars every day, as often as possible. The program is free, because Waymo wants all the consumer feedback it can get. At this moment, Waymo is in the process of adding 500 new self-driving vehicles to its current fleet of 100, which will allow a lot more people to experience its self-driving technology. “Rather than offering people one or two rides, the goal of this program is to give participants access to our fleet every day, at any time, to go anywhere within an area that’s about twice the size of San Francisco,” Waymo CEO John Krafcik said in a Medium post.

Google, where Waymo began, has long been the world leader in self-driving vehicle technology. But it has had key team members depart in the last year to launch self-driving car programs at other tech firms, car companies and startups. When it comes to its technology, the Google car relies on Light Detection and Ranging, or LIDAR, to build a 3D map and “see” potential hazards: it bounces a laser beam off the surfaces surrounding the car to accurately determine the distance and profile of each object. The Google Car was designed to use a Velodyne 64-beam laser, mounted on top of the car for unobstructed viewing and rotating on a custom-built base, to give the on-board processor a 360-degree view. Radar units, two in the front bumper and two in the rear, help the car avoid impact by signaling the on-board processor to apply the brakes or move out of the way when applicable. This technology works in conjunction with other components on the car, such as inertial measurement units, gyroscopes and a wheel encoder, which send accurate signals to the processing unit (the brain) of the vehicle so that it can make better decisions on how to avoid potential accidents.
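To make the laser ranging described above a little more concrete, here is a minimal Python sketch of the general time-of-flight idea: the distance to a surface is half the round-trip travel time of the light pulse multiplied by the speed of light, and each return can be placed in 3D using the beam's angles. This is not Google's or Velodyne's actual code; the function names and the sample values are assumptions made purely for illustration.

```python
import math

# Speed of light in a vacuum, in metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to a surface from the round-trip time of a laser pulse.

    The pulse travels to the object and back, so the one-way
    distance is half of the total path length.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one return (range plus beam angles) into an x, y, z point.

    Hypothetical convention: azimuth is the rotation of the unit around
    the vertical axis, elevation is the tilt of an individual beam.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


if __name__ == "__main__":
    # Illustrative values only: a pulse that returns after 200 nanoseconds,
    # fired straight ahead and tilted slightly downward.
    distance = range_from_round_trip(200e-9)
    print(f"range: {distance:.2f} m")
    print("point:", to_cartesian(distance, azimuth_deg=0.0, elevation_deg=-2.0))
```

Repeating this for every beam as the unit rotates yields the cloud of points from which a 3D map of the surroundings can be built.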

The actual camera technology and setup on each driverless car varies, but one prototype uses cameras mounted to the exterior with slight separation in order to give an overlapping view of the car’s surroundings. This is not unlike the human eyes, which provide overlapping images to the brain so that it can determine things like depth, peripheral movement, and the dimensionality of objects. Each camera has a 50-degree field of view and is accurate to about 30 meters. The cameras themselves are quite useful, but, much like everything else in the car, they are redundant technology: the car would still work even if they were to malfunction. One of the most amazing features of this combination of hardware and software is that it can see and predict the motions of cyclists and pedestrians, identify construction cones and roads blocked by detour signs, and deduce the intentions of traffic cops with signs. Moreover, it can handle four-way stops, adjust its speed on the highway to keep up with traffic, and even adjust its driving to make the ride comfortable for its human payload. The software is also aware of its own blind spots, and behaves cautiously when there might be cross-traffic or a pedestrian hiding in them. All of these capabilities have been transferred to Waymo.
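The overlapping camera views mentioned above are what make depth estimation possible. As a rough sketch of the textbook stereo relation (not the method used in any particular car), depth equals focal length times the distance between the cameras, divided by how far an object shifts between the two images. The image width and camera separation below are hypothetical values chosen for illustration; only the 50-degree field of view comes from the text.

```python
import math


def focal_length_px(image_width_px: int, horizontal_fov_deg: float) -> float:
    """Focal length in pixels for a pinhole camera with the given field of view."""
    half_fov = math.radians(horizontal_fov_deg) / 2.0
    return (image_width_px / 2.0) / math.tan(half_fov)


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by two parallel cameras.

    baseline_m   -- separation between the two cameras, in metres
    disparity_px -- horizontal shift of the point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Assumed for illustration: 1280-pixel-wide images, cameras 0.3 m apart.
    f = focal_length_px(image_width_px=1280, horizontal_fov_deg=50.0)
    for disparity in (40.0, 20.0, 14.0):
        depth = depth_from_disparity(f, baseline_m=0.3, disparity_px=disparity)
        print(f"disparity {disparity:5.1f} px -> depth {depth:6.2f} m")
```

Because depth is inversely proportional to disparity, distant objects shift by only a few pixels, which is one reason a camera pair like this is only accurate out to a limited range.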

But, according to CNN, Waymo is not the first to offer self-driving rides to consumers. Uber launched self-driving rides for select passengers in Pittsburgh last year, and Boston-based nuTonomy offers rides in a Singapore neighborhood. All of these companies use test drivers to guarantee safety. Besides them, Toyota, Nissan, BMW, Honda, Tesla, Mercedes and Ford all have their own self-driving car projects, although none of them is considered to be as advanced. The Guardian has reported on how they are doing so far in the permitted tests.

Nissan is using the all-electric Leaf for various stages of autonomous vehicle testing at its Advanced Engineering Center in Atsugi, Japan, and in California at the Nissan North American Silicon Valley Research Center, according to Travis Parman, director of corporate communications. Nissan has been testing the vehicles since October 2013 and the company plans to commercialize autonomous driving technology in stages, but Parman says its vehicles could have the ability to navigate busy city intersections without a driver by 2020. Moreover, Nissan and NASA just announced a five-year research and development partnership to advance autonomous vehicles and prepare for commercial application of the technology.

Taking a further look into the future and the companies’ predictions, Scott Keogh, head of Audi America, announced at CES 2017 that an Audi that really would drive itself would be available by 2020, the same year as Toyota, while Mark Fields, Ford’s CEO, announced that the company plans to offer fully self-driving vehicles by 2021. The vehicles, which will come without a steering wheel or pedals, will be targeted at fleets that provide autonomous mobility services. Fields expects that it will take several more years before Ford sells autonomous vehicles to the public. At the company’s annual shareholder meeting, BMW CEO Harald Krueger said that BMW will launch a self-driving electric vehicle, the BMW iNext, in 2021, while Raj Nair, Ford’s head of product development, expects that autonomous vehicles of SAE level 4 (which means that the car needs no driver but may not be capable of driving everywhere) will hit the market by 2020.

The US Secretary of Transportation stated at the 2015 Frankfurt Auto Show that he expects driverless cars to be in use all over the world by 2025. So the question remains: when do you plan to buy yours?

Old versus new technologies

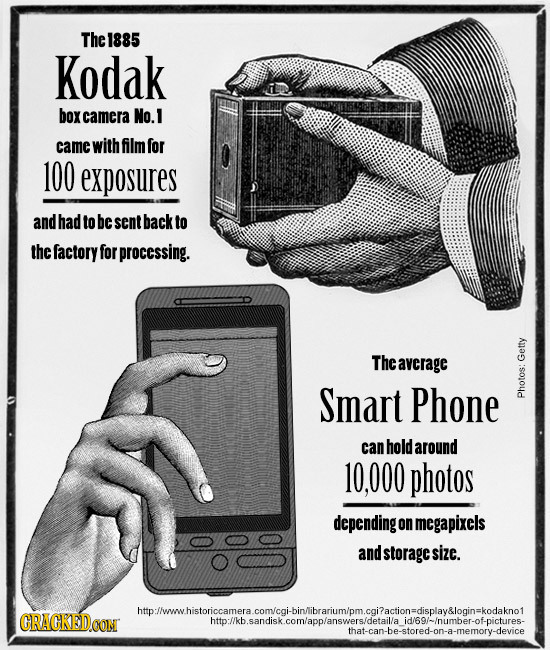

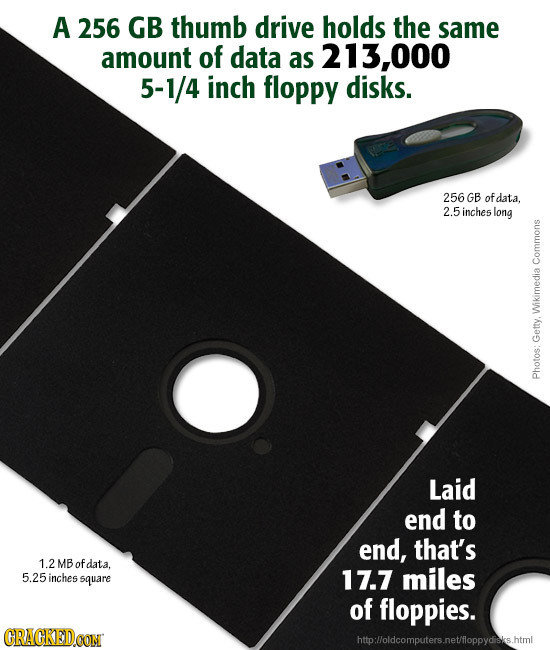

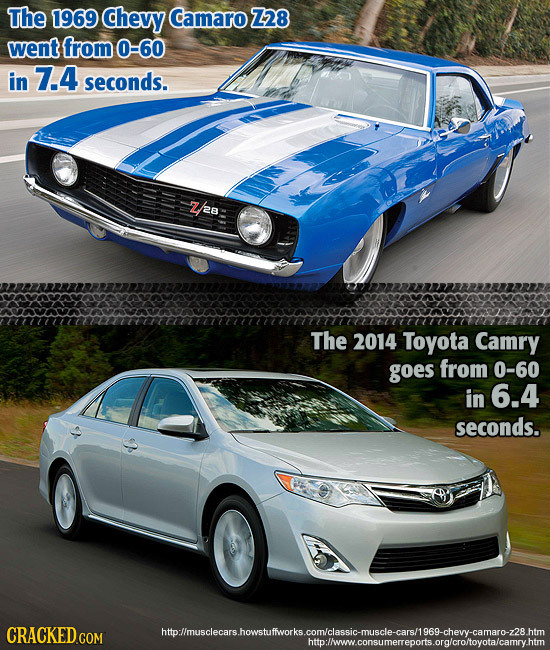

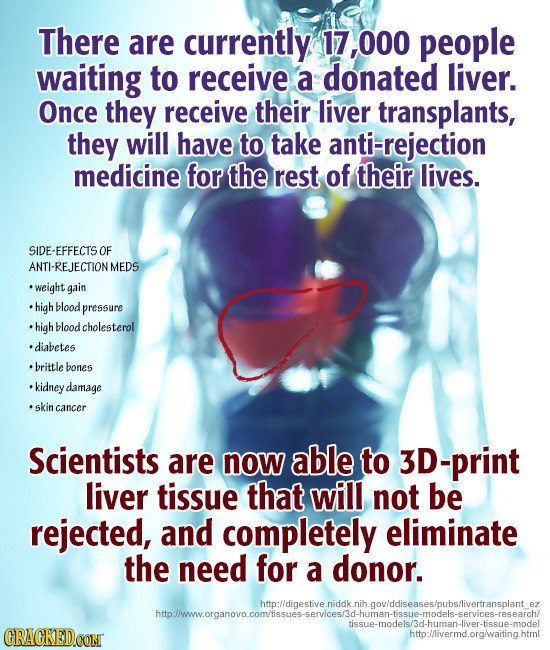

New digital technologies always get people curious and excited, whether it’s the digital camera that is cheaper than developing rolls upon rolls of film, or the photo-sharing apps that, in turn, make your iPhone camera easier to use than your old digital camera. The ability to do more, faster, and to share it easily is beating the good old technology that you needed instruction to use. Some years ago, not everybody knew how to operate a camera, and film rolls had to be taken to specialized photo labs to be developed; today anyone can take great pictures just by using a phone or a digital camera.

Still, it’s important not to forget that new technology is built on the old, and that people sometimes still prefer to use the older versions. In some cases, using the old “ways” has even become vintage and cool.

Here are some then-and-now infographics and pictures that make it easy to compare the old and the new side by side.
