SignAll has been working for years to make accurate, real-time machine translation of ASL a reality and, according to Business Wire, has developed the world’s first automated sign language translator. The goal of the technology is to build a bridge between the deaf and hearing worlds. Providing full accessibility for deaf people is a very ambitious aspiration that modern technology could not support until now.

It’s multi-channel communication; it’s really not just about shapes or hand movements. If you really want to translate sign language, you need to track the entire upper body and facial expressions — that makes the computer vision part very challenging. – CEO Zsolt Robotka

Most people take it for granted that they can communicate in their first language in their home country. However, the first language of people born deaf is sign language; English is only their second language. SignAll’s aim is to enable deaf people to communicate in their first language, American Sign Language.

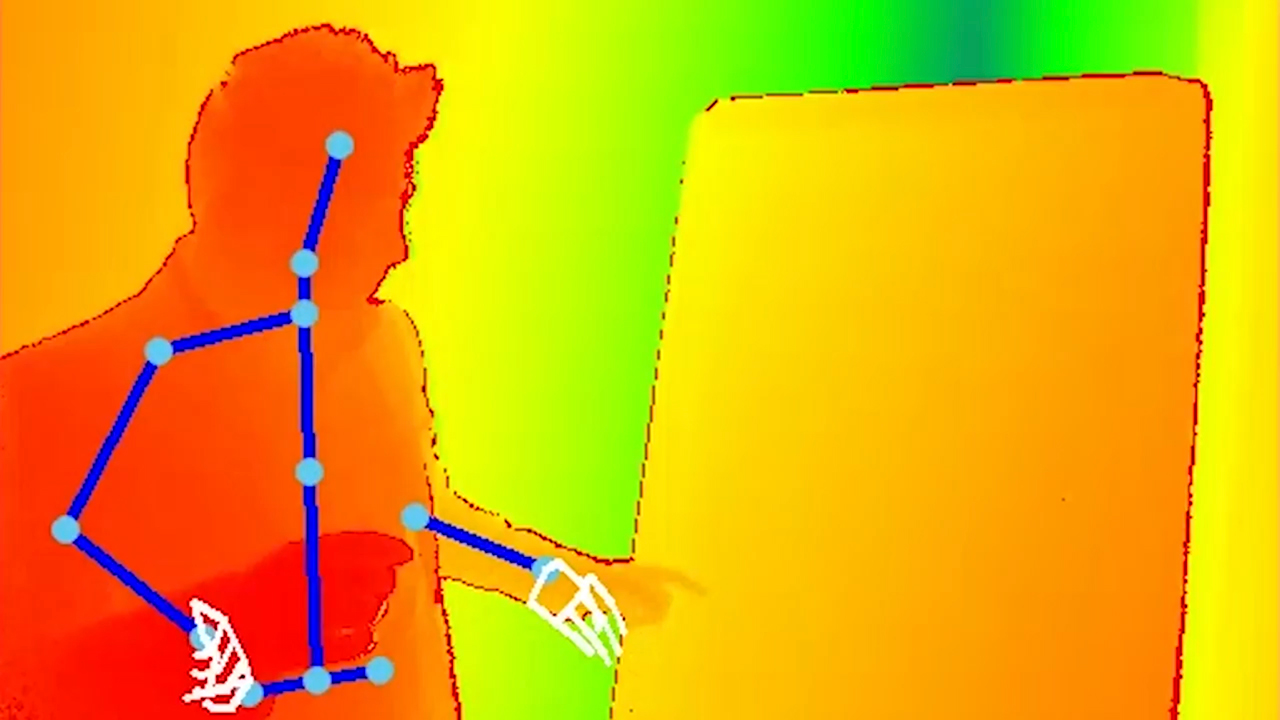

At present, SignAll uses three webcams, a depth sensor and a PC. The depth sensor is placed in front of the sign language user at chest height, and the cameras are placed around them. This allows the shape and path of the hands and gestures to be tracked continuously. The PC synchronizes and processes the images in real time, and a natural language processing module then transforms the result into grammatically correct, fully formed sentences. This enables communication by making sign language understandable to everyone.
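To make the "syncs up" step concrete, here is a minimal sketch of one common way to align frames from several cameras: match each frame of a reference camera to the nearest-in-time frame of every other camera, and discard groups where the time gap is too large. This is an illustration only, not SignAll's actual implementation; the function names `nearest_frame` and `sync_frames`, the `max_skew` threshold, and the assumption that every camera provides sorted timestamps in seconds on a shared clock are all hypothetical.

```python
from bisect import bisect_left


def nearest_frame(timestamps, t):
    """Return the index of the timestamp closest to t.

    Assumes `timestamps` is non-empty and sorted in ascending order.
    """
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Pick whichever neighbour is closer in time to t.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def sync_frames(reference, others, max_skew=0.02):
    """Group each reference timestamp with the nearest frame of each other camera.

    `reference` is a list of timestamps (seconds) from the reference sensor.
    `others` is a list of timestamp lists, one per additional camera.
    Groups in which any camera is more than `max_skew` seconds away are dropped.
    Returns a list of (reference_timestamp, [frame_index_per_camera]) tuples.
    """
    groups = []
    for t in reference:
        idxs = [nearest_frame(ts, t) for ts in others]
        if all(abs(ts[i] - t) <= max_skew for ts, i in zip(others, idxs)):
            groups.append((t, idxs))
    return groups
```

For example, calling `sync_frames(depth_times, [cam_a_times, cam_b_times])` would return only those moments for which every camera has a frame within 20 ms of the depth sensor's frame.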

According to TechCrunch, the setup right now uses a Kinect 2 more or less at center and three RGB cameras positioned a foot or two out. The system must reconfigure itself for each new user, since just as everyone speaks a bit differently, all ASL users sign differently.

“We need this complex configuration because then we can work around the lack of resolution, both time and spatial (i.e. refresh rate and number of pixels), by having different points of view,” Márton Kajtár, chief R&D officer, told TechCrunch. “You can have quite complex finger configurations, and the traditional methods of skeletonizing the hand don’t work because they occlude each other. So we’re using the side cameras to resolve occlusion.”
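The idea of using side cameras to resolve occlusion can be sketched in a few lines. The example below assumes each camera has already produced an estimate for some hand joints, expressed in a shared coordinate frame together with a confidence score, and simply keeps the most confident estimate per joint. This is a deliberately simple stand-in, not the method SignAll uses; the function name `fuse_views`, the joint names, and the data layout are hypothetical.

```python
def fuse_views(views):
    """Combine per-camera joint estimates by keeping the most confident one.

    `views` is a list of dicts, one per camera, mapping a joint name to a
    tuple (x, y, z, confidence). Coordinates are assumed to be in a common
    frame already. A joint hidden from one camera can simply be missing
    from that camera's dict or carry a low confidence.
    Returns a dict mapping joint name to (x, y, z).
    """
    fused = {}
    best_conf = {}
    for view in views:
        for joint, (x, y, z, conf) in view.items():
            # Keep this estimate only if it beats the best seen so far.
            if conf > best_conf.get(joint, -1.0):
                best_conf[joint] = conf
                fused[joint] = (x, y, z)
    return fused
```

If the front camera sees a fingertip only partially (low confidence) while a side camera sees it clearly, the side camera's estimate is the one that survives. A production system would more likely triangulate or weight several views together rather than pick a single winner.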

Facial expressions and slight variations in gestures also inform what is being said, for example adding emotion or indicating a direction. And then there’s the fact that sign language is fundamentally different from English or any other common spoken language.

SignAll’s first public pilot of the system, at Gallaudet University.